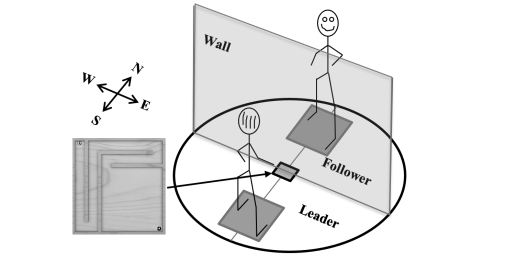

Joint Action

When two or more people aim to produce joint action outcomes, they need to coordinate their individual actions in space and time. Successful joint action performance has been reported to depend, among other factors, on the visual and somatosensory information available to the joint actors. This study investigated whether and how systematically manipulating visual information modulates real-time joint action when dyads perform a whole-body joint balance task. To this end, we introduced the Joint Action Board (JAB), on which partners guided a ball through a maze towards a virtual hole by jointly shifting their weight on the board under three visual conditions:

- the Follower had visual access neither to the Leader nor to the maze;

- the Follower had visual access to the Leader but not to the maze;

- the Follower had full visual access to both the Leader and the maze.

Joint action performance was measured as the completion time of the maze task; interpersonal coordination was examined by means of kinematic analyses of both partners’ motor behaviour. We predicted that systematically adding visual information to the available haptic information would significantly increase joint performance, and that Leaders would change their coordination behaviour depending on these conditions. Results showed that adding visual information to haptics increased joint action performance in a Leader–Follower relationship in a joint balance task. In addition, interpersonal coordination behaviour (e.g. sway range of motion and the time lag between the partners’ bodies) changed depending on the visual information provided to the partners in the jointly executed task.

Find this article in Experimental Brain Research
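This page does not describe how the kinematic measures were computed. As a purely illustrative sketch, here is one common way to obtain two of the named measures from sway traces. It assumes two equally sampled, time-aligned position signals (one per partner), defines sway range of motion as max minus min, and defines time lag as the shift that maximizes the normalized cross-correlation. These definitions and the function names are hypothetical, not the study's actual pipeline.

```python
import math


def sway_range(signal):
    """Hypothetical definition: sway range of motion = max minus min of a sway trace."""
    return max(signal) - min(signal)


def best_lag(leader, follower, max_lag):
    """Hypothetical time-lag measure via normalized cross-correlation.

    Returns the lag (in samples) in [-max_lag, max_lag] at which
    follower[t + lag] best matches leader[t]. A positive lag means the
    follower trails the leader. Assumes equal-length, equally sampled traces.
    """
    n = len(leader)
    mean_l = sum(leader) / n
    mean_f = sum(follower) / n
    best, best_score = 0, -math.inf
    for lag in range(-max_lag, max_lag + 1):
        # Pair mean-centred samples over the overlapping window only.
        pairs = [(leader[t] - mean_l, follower[t + lag] - mean_f)
                 for t in range(n) if 0 <= t + lag < n]
        num = sum(a * b for a, b in pairs)
        den = math.sqrt(sum(a * a for a, _ in pairs) * sum(b * b for _, b in pairs))
        score = num / den if den else 0.0
        if score > best_score:
            best, best_score = lag, score
    return best
```

For example, if a synthetic Follower trace repeats a sinusoidal Leader trace 5 samples later, `best_lag(leader, follower, 20)` recovers a lag of 5 samples; dividing by the sampling rate would convert this to seconds.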

Contact: Prof. Dr. Eric Eils