Deep Learning Algorithms and Architectures

Our foundational research projects aim to advance the understanding and interpretability of deep learning, in both theory and practice. Some projects empirically test predictions derived from deep learning theory to check whether theoretical results hold up against real-world observations. Others develop novel network architectures that are more efficient and effective, and also inherently more interpretable. By bridging theory and practice, we strive to uncover fundamental principles that improve the reliability, transparency, and performance of deep learning models.

Related Publications:

-

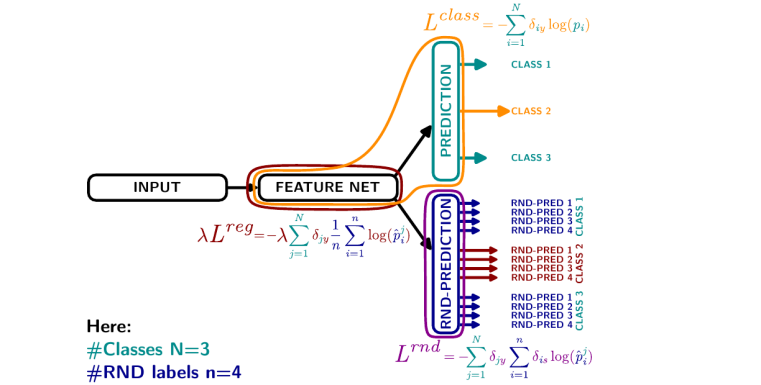

Becker, M., Risse, B. (2024) Learned Random Label Predictions as a Neural Network Complexity Metric. Workshop on Scientific Methods for Understanding Deep Learning @NeurIPS

-

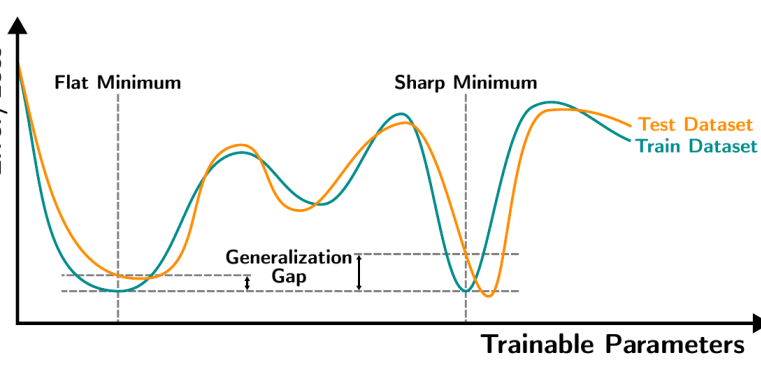

Becker, M., Altrock, F., Risse, B. (2024)Momentum-SAM: Sharpness Aware Minimization without Computational Overhead. arXiv preprint