Replication Research in Cooperation with FORRT

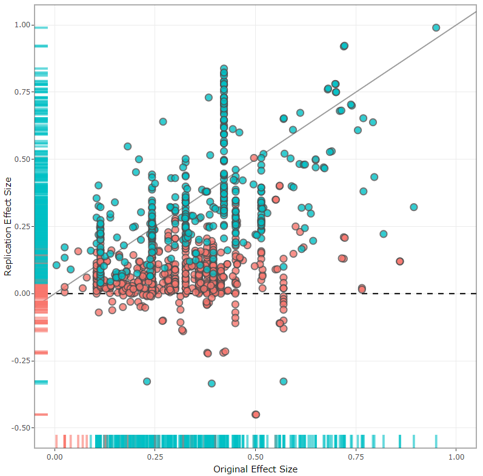

Background: The scientific record is biased towards exciting and surprising results. Replications, that is, repeated tests of published findings, have received little attention. In cooperation with the Framework for Open and Reproducible Research Training (FORRT) and together with a large international and interdisciplinary team of researchers, we have created the world's largest database of scientific replication studies. The findings are analyzed live and are openly accessible. Researchers can contribute new findings or use the database to address their own research questions.

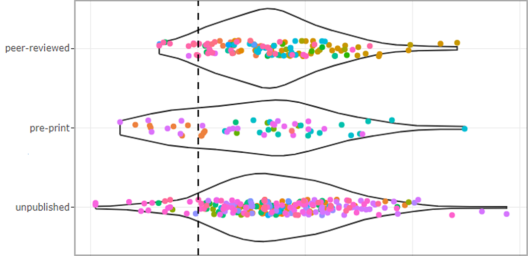

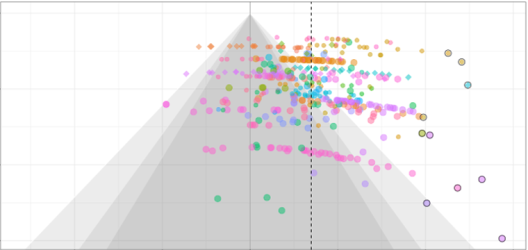

Central findings: Using the database to estimate replication success, we found a replication rate of 50%. That is, only 5 out of 10 replication studies arrive at the same conclusions as the original studies.

Further information: All project materials are available online.

FORRT Replication Database

Framework for Open and Reproducible Research Training