- EXTENDED DEADLINE: 8th March -

Target Audience

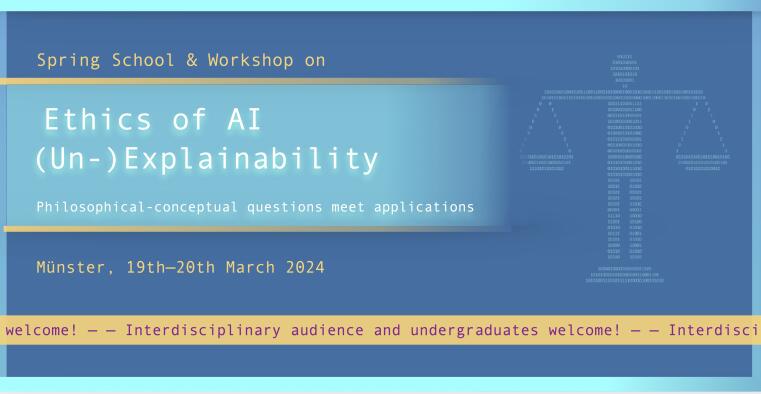

The event is aimed at both experts and an interdisciplinary audience, including students. The Spring School is designed to provide the essential technical as well as philosophical background required for the Workshop.

Scientific Scope

Standard machine learning systems are well known to lack explainability: they perform their “intelligent” task (transforming inputs into outputs in a suitable way) but generally do not deliver explanations for these outcomes. In particular, they neither provide a general rule that connects inputs to outputs nor indicate which features of the inputs were important for producing specific outputs. It is this lack of explainability that gives ML algorithms their frequently attributed black-box character.

Beyond raising interesting epistemic questions, this absence of explanations becomes ethically relevant when the results of a machine learning system directly concern humans, as in many current or envisaged applications ranging from medical diagnosis to crime prediction to insurance premiums. In such sensitive cases, affected persons and stakeholders understandably, and often legitimately, demand an explanation: for example, to check the reliability of potentially far-reaching results (in particular, to rule out unfair biases), or to justify the outcomes to the persons concerned (giving them a chance to contest the results or take legal action against them).

The challenges raised by such situations are at least twofold, and this workshop aims to bring the two corresponding strands of current research into dialogue. On the one hand, there are conceptual-philosophical questions concerning explanations, for instance:

- For what exact purposes would one need explanations in such ethically relevant cases?

- What kinds of explanations are required or desirable in which situations? Are there domain-specific needs? What are the required features?

- What does the use of unexplainable AI mean for the so-called epistemic condition of moral responsibility? Under what conditions can individuals or stakeholders be held accountable for AI-based decisions?

On the other hand, advances in the mathematical-technical foundations of machine learning systems have made some explanations possible and promise to yield further types of them, raising questions such as:

- What kind of explanations are currently available for which systems?

- Which of the desired features do these explanations have and which are still missing?

- What are the expected developments?

- Are there fundamental limits to advancing explanations, as indicated by the much-discussed performance-explainability trade-off?

Along these lines, the workshop is meant to provide a forum in which renowned experts in the field foster exchange between the two strands.

Program

Spring school

Tue, 9.00 a.m. to 5.30 p.m.

Prof. Dr. Benjamin Risse (Institute for Geoinformatics, UM): Unintuitive? Yes. — Intelligent? No! From poorly chosen scientific terminology to superfluous AI questions

Dr. Stefan Roski (ZfW and University of Hamburg): Explanations and Explainability

Dr. Paul Näger (Department of Philosophy, UM): Basics of AI Ethics

- Colloquium lecture -

Prof. Dr. Gitta Kutyniok (LMU, München): A Mathematical Perspective on Legal Requirements of the EU AI Act: From the Right to Explain to Neuromorphic Computing

Workshop

Wed, 9.00 a.m. to 5.30 p.m.

Carlos Zednik, PhD (TU Eindhoven): Disentangling XAI Concepts: Explanation, Interpretation, and Justification

Prof. Dr. Eva Schmidt (TU Dortmund): Reasons of AI Systems

Dr. Astrid Schomäcker (University of Bayreuth): That’s not fair! Explainability as a means to increase algorithmic fairness

Prof. Dr. Kristian Kersting (TU Darmstadt): Where there is much light, the shadow is deep. XAI and Large Language Models

Prof. Dr. Florian Boge (TU Dortmund): Put it to the Test: Getting Serious about Explanations in Explainable Artificial Intelligence

Dr. Thomas Grote (University of Tübingen): The Double-Standard Problem in Medical ML Solved: Why Explainability Matters

Schedule and abstracts are coming soon.

Here you can find the program, the abstracts, and a poster of the event.